The Symmetric Nearest Neighbor (SNN) filter is a non-linear, edge-preserving image processing filter. It is an effective noise reduction technique that removes noise while keeping image edges sharp. Its results are very close to those of the median and Kuwahara filters.

Applications

Some of the most popular applications of the symmetric nearest neighbor filter are:

- Computer vision – because it preserves image contours well, it is useful for edge detection.

- Painting effect – its ability to remove textures and sharpen the edges of photos makes it suitable for creating oil painting effects.

You can use our free online image analysis tool to test different algorithms on your own images with custom parameters.

How the Symmetric NN Filter Works

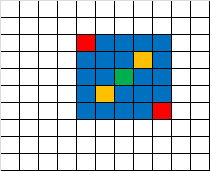

Like many other image processing filters, the Symmetric NN filter slides a window over the image, centered on each pixel in turn. At each position, the pixels under the window are split into pairs of points that lie symmetrically opposite each other about the central pixel.

For each pair, we compare both pixels with the central pixel and add the one whose value is closer to it to a running sum. As with the mean filter, the resulting pixel value is the average of the selected values.

green – central pixel; red – opposite pixels
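The steps above can be sketched on the CPU as follows. This is a minimal, unoptimized illustration rather than a reference implementation: it assumes a grayscale image stored as a list of rows, the function name `snn_filter` and the `radius` parameter are hypothetical, and clamping coordinates at the image border is an assumed policy that the description above does not specify. Each symmetric pair is visited exactly once and the central pixel itself is left out of the average.

```python
def snn_filter(image, radius=1):
    """Symmetric nearest neighbor filter for a 2D grayscale image (list of rows)."""
    height, width = len(image), len(image[0])

    def pixel(y, x):
        # Assumed border policy: clamp coordinates to the image edges.
        y = min(max(y, 0), height - 1)
        x = min(max(x, 0), width - 1)
        return image[y][x]

    # One offset from each symmetric pair: the half of the window that follows
    # the center in raster order. Offset (dy, dx) is paired with (-dy, -dx).
    offsets = [(dy, dx)
               for dy in range(0, radius + 1)
               for dx in range(-radius, radius + 1)
               if dy > 0 or dx > 0]

    result = []
    for y in range(height):
        row = []
        for x in range(width):
            center = pixel(y, x)
            total = 0.0
            for dy, dx in offsets:
                a = pixel(y + dy, x + dx)
                b = pixel(y - dy, x - dx)
                # Keep whichever pixel of the pair is closer to the center value.
                total += a if abs(a - center) < abs(b - center) else b
            # Average of the selected values, as in a mean filter.
            row.append(total / len(offsets))
        result.append(row)
    return result


# A vertical step edge: every pair offers at least one pixel from the same
# side of the edge as the center, so the edge is not blurred.
step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
print(snn_filter(step) == step)  # True
```

A plain mean filter over the same window would smear the step across neighboring columns; picking the closer pixel of each pair is what keeps the edge intact.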

Source code

For fast filtering, we propose a GPU/WebGL version of the Symmetric Nearest Neighbor filter, written as a fragment shader.

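    precision mediump float;

    // Source image
    uniform sampler2D u_image;
    // Image size in pixels
    uniform vec2 u_textureSize;
    // Divisor for the final average; it should equal the number of loop
    // iterations below so that the result (and its alpha) is scaled correctly
    uniform int u_pixelsCount;

    // %kernelSize% is a template placeholder; it must be replaced with the
    // window size before the shader is compiled
    #define KERNEL_SIZE %kernelSize%
    #define HALF_SIZE (KERNEL_SIZE / 2)

    void main() {
        // Texture coordinate of the current pixel and the size of one pixel step
        vec2 textCoord = gl_FragCoord.xy / u_textureSize;
        vec2 onePixel = vec2(1.0, 1.0) / u_textureSize;

        vec4 meanColor = vec4(0);
        // Central pixel
        vec4 v = texture2D(u_image, textCoord);

        // Walk half of the window; each offset (x, y) is paired with (-x, -y)
        for (int y = 0; y <= HALF_SIZE; y++) {
            for (int x = -HALF_SIZE; x <= HALF_SIZE; x++) {
                vec4 v1 = texture2D(u_image, textCoord + vec2(x, y) * onePixel);
                vec4 v2 = texture2D(u_image, textCoord + vec2(-x, -y) * onePixel);

                // Per-channel distance of each pixel in the pair from the center
                vec4 d1 = abs(v - v1);
                vec4 d2 = abs(v - v2);

                // For each color channel, keep the value closer to the center
                vec4 rv = vec4(((d1[0] < d2[0]) ? v1[0] : v2[0]),
                               ((d1[1] < d2[1]) ? v1[1] : v2[1]),
                               ((d1[2] < d2[2]) ? v1[2] : v2[2]), 1);

                meanColor += rv;
            }
        }

        gl_FragColor = meanColor / float(u_pixelsCount);
    }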
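Every loop iteration in the shader adds a color whose alpha is 1, so the final division only produces an opaque, correctly scaled pixel when `u_pixelsCount` equals the number of iterations. The host code that sets this uniform is not shown, so the following is a sketch under that assumption; the helper name `shader_sample_count` is hypothetical. It mirrors the shader's two loops to derive the value for a given `KERNEL_SIZE`.

```python
def shader_sample_count(kernel_size):
    """Count the (v1, v2) pairs visited by the shader's loops for a KERNEL_SIZE."""
    half_size = kernel_size // 2  # mirrors HALF_SIZE (integer division)
    count = 0
    for y in range(0, half_size + 1):               # y = 0 .. HALF_SIZE
        for x in range(-half_size, half_size + 1):  # x = -HALF_SIZE .. HALF_SIZE
            count += 1
    return count


for size in (3, 5, 7):
    half = size // 2
    # Closed form: (HALF_SIZE + 1) rows times (2 * HALF_SIZE + 1) columns.
    print(size, shader_sample_count(size), (half + 1) * (2 * half + 1))
```

Note that the `y = 0` row runs over both `x` and `-x`, so each horizontal pair is sampled twice and the central pixel is paired with itself once; the count above includes those iterations because the shader accumulates them too.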

Online Image Effects

The Symmetric Nearest Neighbor filter is part of our collection of online image processing tools. With them you can experiment with different image filters and achieve different photo effects.

Resources

- SubSurfWiki – Symmetric nearest neighbour filter

- Hall, M. (2007). Smooth operator: smoothing seismic horizons and attributes. The Leading Edge 26 (1), January 2007, p. 16-20. http://dx.doi.org/10.1190/1.2431821